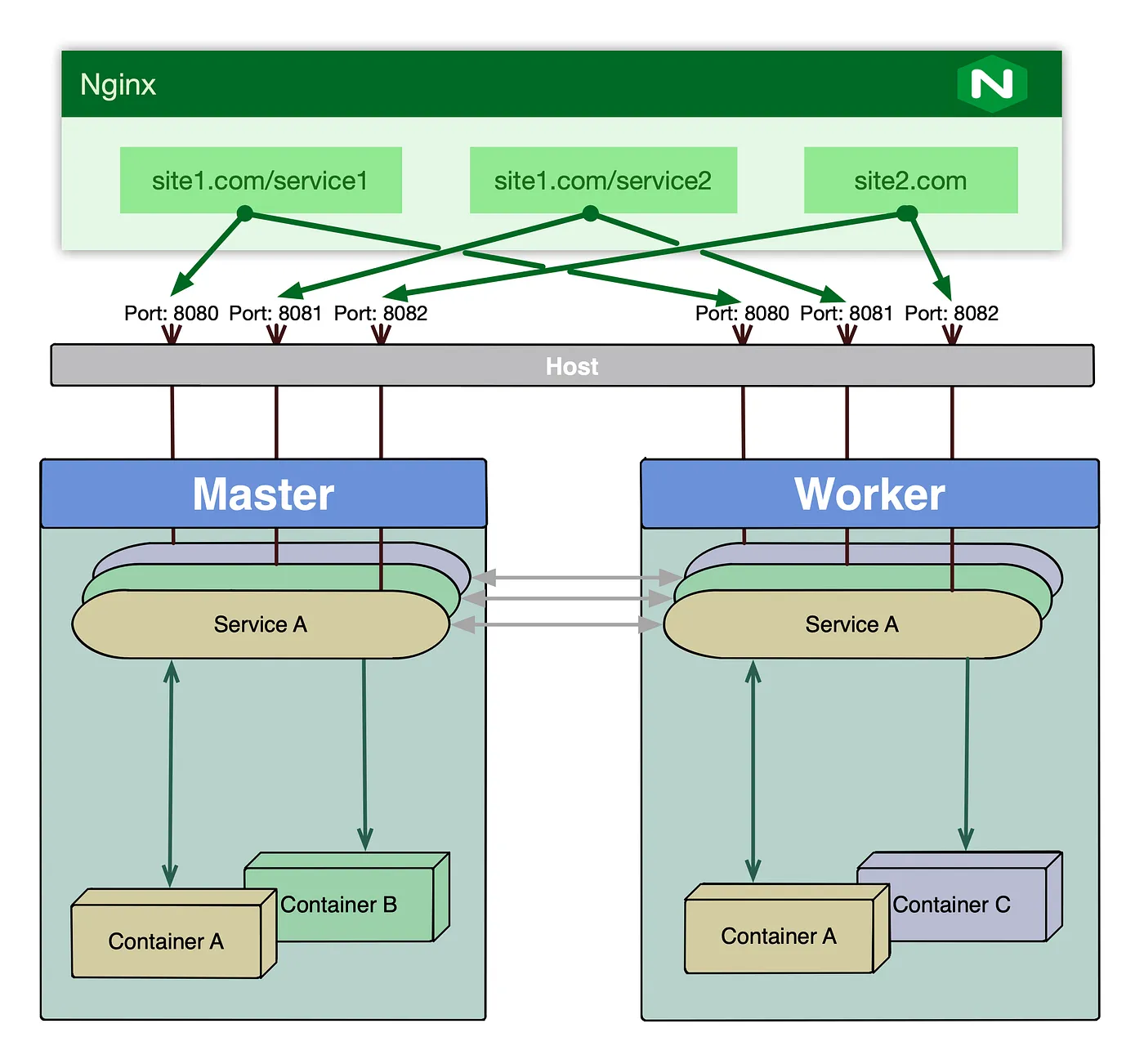

Accessing applications deployed to Docker Swarm is very similar to accessing applications deployed to a standalone Docker instance: we have to publish one or more ports of the service so that external clients can reach it.

Then, ideally, we set up a reverse proxy server in front of the swarm which, on the one hand, maps the arbitrary port published by the swarm to a virtual server or context in the proxy and, on the other hand, provides some form of high availability and load balancing.

This works fine as long as the number of services is relatively low. However, after a certain point it becomes fairly hard to manage, from assigning port numbers to each service to manually configuring virtual servers and contexts.

In an ideal world, a service would become available a few seconds after it is deployed, without any additional manual effort.

It turns out that this is relatively easy to achieve with the help of a few open source products.

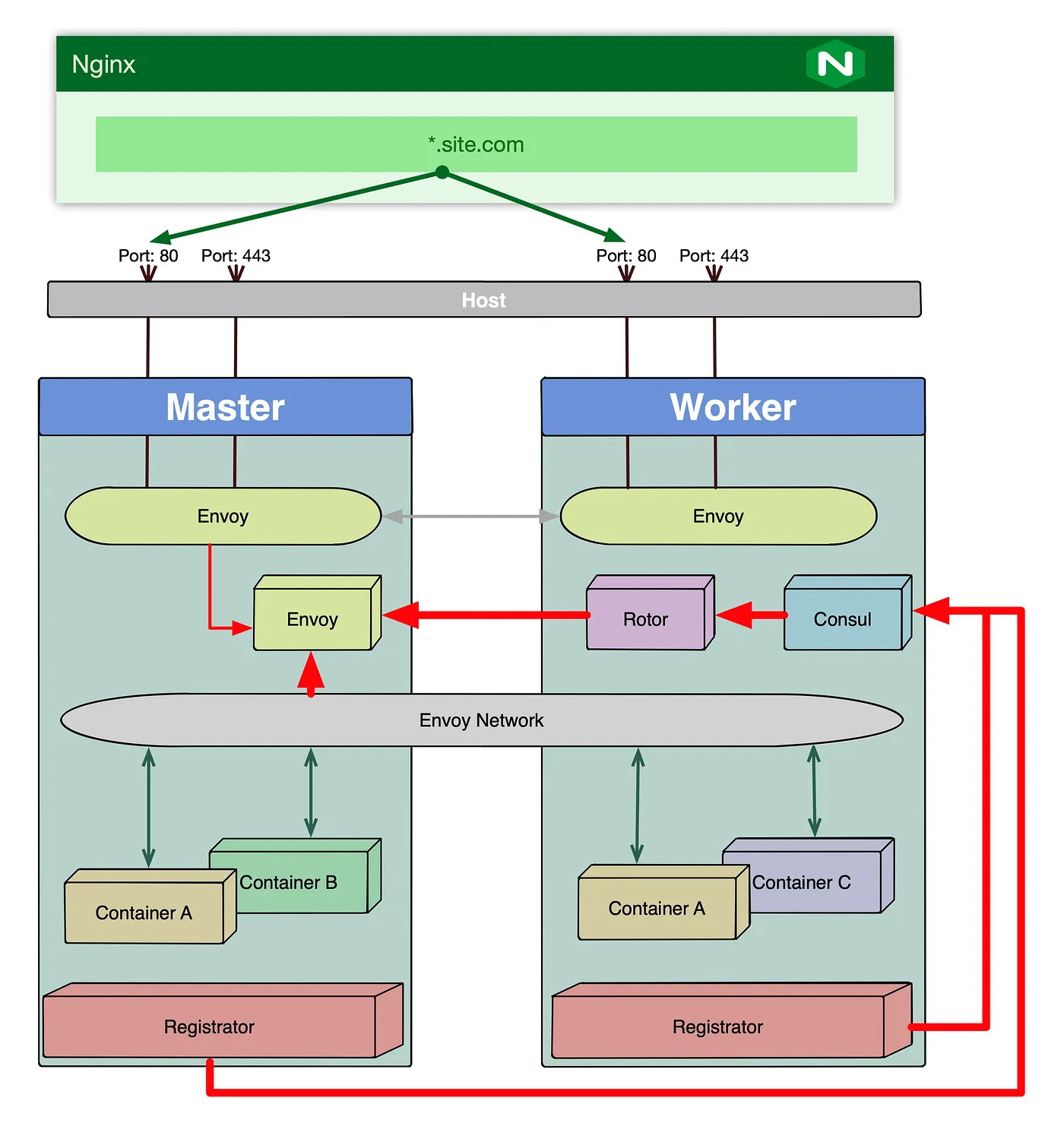

To configure auto-discovery with Envoy Proxy we will use the following products:

- Registrator: a service registry bridge. It registers and deregisters services for Docker containers as they come online and go offline

- Consul: a service mesh solution to connect services across the swarm

- Rotor: a bridge between the Consul service mesh and Envoy

The proposed solution works as follows: Registrator runs on every node of the swarm, constantly monitoring containers as they are brought up and down. Registrator then feeds this data to Consul, which builds service definitions for each container in the service that we want to register.

Rotor then attaches to the Consul instance, reads the service information, and feeds Envoy with real-time configuration. Envoy, in turn, is configured to set up its proxy listeners and clusters dynamically.

A disclaimer, or actually two:

First, this is one of many possible solutions. It works perfectly well in our test environment, but roll it out to production at your own risk.

Second, Rotor is no longer officially maintained. The company behind it, Turbine Labs, has shut down. It is stable software, but bear this in mind. Also, there is a pull request in the Registrator codebase that has not been merged for three years, yet it is extremely helpful in the above architecture. In essence, it allows a specific network to be used to connect Envoy with the underlying services.

In order to access the services in the swarm via Envoy, you have to create a wildcard DNS record pointing to one of the nodes in the swarm or to a load balancer deployed in front of the swarm. Envoy will listen on port 80, and in order to be routed to a specific service, each request must carry a Host HTTP header specifying the external name of that service.
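The Host-based routing can be exercised even before the wildcard DNS record exists. Below is a minimal sketch in Python (standard library only) that sends a request to a swarm node by IP address while setting the Host header explicitly. The function names `build_request` and `fetch_via_envoy` are made up for this illustration and are not part of any of the products mentioned here.

```python
import urllib.request


def build_request(node_address, service_name, path="/"):
    """Build a GET request for a swarm node that Envoy can route by Host header."""
    url = f"http://{node_address}{path}"
    # The explicit Host header replaces the one urllib would derive from
    # the URL, so Envoy sees the external name of the service.
    return urllib.request.Request(url, headers={"Host": service_name})


def fetch_via_envoy(node_address, service_name, path="/", timeout=5.0):
    """Send the request and return (status_code, body_bytes).

    urllib raises urllib.error.HTTPError for 4xx/5xx responses, for example
    when Envoy has no route for the requested name.
    """
    request = build_request(node_address, service_name, path)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read()
```

For instance, `fetch_via_envoy("10.0.0.10", "consul.swarm.demo.com")` would target the Consul frontend through Envoy, where `10.0.0.10` is a placeholder for the address of any swarm node.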

So, before we begin deploying the stack, we need an overlay network that will be used exclusively by Envoy and the services it connects to.

To create the new network, run the following command:

    docker network create -d overlay --attachable --scope swarm envoy

Now we have to deploy a stack containing a trio of services: Consul, Rotor and Envoy.

The compose file for the stack looks like this:

    version: '3.7'

    services:
      consul:
        image: consul:latest
        command: "agent -server -retry-join consul -client 0.0.0.0 -domain swarm.demo.com -bind '{{ GetInterfaceIP \"eth0\" }}' -ui"
        environment:
          - CONSUL_BIND_INTERFACE=eth0
          - 'CONSUL_LOCAL_CONFIG={"server": true,"skip_leave_on_interrupt": true,"ui" : true,"bootstrap_expect": 1}'
          - SERVICE_8500_NAME=consul.swarm.demo.com
          - SERVICE_8500_NETWORK=envoy
        ports:
          - "8500:8500"
        networks:
          - default
          - envoy
        deploy:
          replicas: 1
          placement:
            constraints: [node.role == manager]

      rotor:
        image: turbinelabs/rotor:0.19.0
        ports:
          - "50000:50000"
        environment:
          - ROTOR_CMD=consul
          - ROTOR_CONSUL_DC=dc1
          - ROTOR_CONSUL_HOSTPORT=consul:8500
          - ROTOR_XDS_RESOLVE_DNS=true

      envoy:
        image: envoyproxy/envoy:v1.13.0
        ports:
          - "9901:9901"
          - "80:80"
        configs:
          - source: envoy
            target: /etc/envoy/envoy.json
            mode: 0640
        command: envoy -c /etc/envoy/envoy.json
        deploy:
          mode: global
        networks:
          - default
          - envoy

    configs:
      envoy:
        file: envoy.json

    networks:
      envoy:
        external: true

You have to adjust the above example to your own needs. Pay particular attention to the domain in the Consul command and to the names of the services. I used swarm.demo.com as an example; replace it with your real domain should you want to try this approach.

The Envoy service requires a configuration file that should be stored in the same directory as the docker-compose.yaml file above.

The configuration file is named envoy.json and should have the following content:

    {
      "node": {
        "id": "default-node",
        "cluster": "default-cluster",
        "locality": {
          "zone": "default-zone"
        }
      },
      "static_resources": {
        "clusters": [
          {
            "name": "tbn-xds",
            "type": "LOGICAL_DNS",
            "connect_timeout": {
              "seconds": "30"
            },
            "lb_policy": "ROUND_ROBIN",
            "hosts": [
              {
                "socket_address": {
                  "protocol": "TCP",
                  "address": "rotor",
                  "port_value": "50000"
                }
              }
            ],
            "http2_protocol_options": {
              "max_concurrent_streams": 10
            },
            "upstream_connection_options": {
              "tcp_keepalive": {
                "keepalive_probes": {
                  "value": 3
                },
                "keepalive_time": {
                  "value": 30
                },
                "keepalive_interval": {
                  "value": 15
                }
              }
            }
          }
        ]
      },
      "dynamic_resources": {
        "lds_config": {
          "api_config_source": {
            "api_type": "GRPC",
            "grpc_services": [
              {
                "envoy_grpc": {
                  "cluster_name": "tbn-xds"
                }
              }
            ],
            "refresh_delay": {
              "seconds": 10
            }
          }
        },
        "cds_config": {
          "api_config_source": {
            "api_type": "GRPC",
            "grpc_services": [
              {
                "envoy_grpc": {
                  "cluster_name": "tbn-xds"
                }
              }
            ],
            "refresh_delay": {
              "seconds": 10
            }
          }
        }
      },
      "admin": {
        "access_log_path": "/dev/stdout",
        "address": {
          "socket_address": {
            "protocol": "TCP",
            "address": "0.0.0.0",
            "port_value": "9901"
          }
        }
      }
    }

To deploy the stack, run the following command from the directory where both of the above files are stored:

    docker stack deploy -c docker-compose.yaml envoy
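Once the stack is running, the Envoy admin interface published on port 9901 offers a quick way to see which clusters Envoy currently knows about. The sketch below is only an illustration: it assumes the plain-text output of the admin `/clusters` endpoint, where each line starts with the cluster name followed by `::`, and the helper names `cluster_names` and `fetch_clusters` are invented for this example.

```python
import urllib.request


def cluster_names(clusters_text):
    """Collect cluster names from the plain-text output of Envoy's /clusters."""
    names = set()
    for line in clusters_text.splitlines():
        # Each line is assumed to look like "<cluster>::<key>::<value>".
        name, separator, _ = line.partition("::")
        if separator and name:
            names.add(name)
    return names


def fetch_clusters(admin_url, timeout=5.0):
    """Download the raw /clusters output from the Envoy admin interface."""
    with urllib.request.urlopen(f"{admin_url}/clusters", timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Calling `cluster_names(fetch_clusters("http://10.0.0.10:9901"))`, with `10.0.0.10` standing in for a swarm node address, should at this stage list at least the statically defined `tbn-xds` cluster. Further clusters would be expected to appear as services get registered.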

Once the stack is deployed, you have to run Registrator in a container on each node of the swarm. These containers should not be deployed to the swarm but rather started as standalone containers.

This makes it possible to drain a node when necessary without leaving dangling services behind in the Consul configuration.

To run Registrator, execute the following command on each node of the cluster:

    docker run -d --name registrator \
      -v /var/run/docker.sock:/tmp/docker.sock \
      --restart unless-stopped \
      --net envoy \
      iktech/registrator \
      -internal=true -cleanup -explicit=true -tags "tbn-cluster" -resync=5 \
      consul://consul:8500

As soon as the Registrator containers are up, you should be able to access the Consul frontend via Envoy straight away, using the name specified in the SERVICE_8500_NAME environment variable of the Consul service: consul.swarm.demo.com in our example.
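To see what Registrator actually wrote to Consul, the catalog can be queried over the Consul HTTP API, which the stack publishes on port 8500. The following Python sketch reads the `/v1/catalog/service/<name>` endpoint and extracts the address and port of every registered instance. The helper names are invented for this example, and it assumes that the service is registered under the name given in SERVICE_8500_NAME.

```python
import json
import urllib.request


def service_endpoints(catalog_entries):
    """Extract (address, port) pairs from a /v1/catalog/service/<name> response."""
    endpoints = []
    for entry in catalog_entries:
        # ServiceAddress may be empty; in that case fall back to the
        # address of the node the service is registered on.
        address = entry.get("ServiceAddress") or entry.get("Address")
        endpoints.append((address, entry["ServicePort"]))
    return endpoints


def fetch_service(consul_url, service_name, timeout=5.0):
    """Return the parsed catalog entries for one service name."""
    url = f"{consul_url}/v1/catalog/service/{service_name}"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

For example, `service_endpoints(fetch_service("http://10.0.0.10:8500", "consul.swarm.demo.com"))` would list the Consul instances as Rotor sees them, where `10.0.0.10` is again a placeholder for a swarm node address.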

So now, in order for a service in the swarm to be discovered by Envoy, the following three conditions must be met:

- a port, which Envoy will use as an upstream connection, must be exposed at image build time using the `EXPOSE` directive in the Dockerfile

- the container in question must be attached to the `envoy` network

- the container must be configured correctly by setting the following environment variables

SERVICE_<Port>_NETWORK: in this example it should always be envoy. In theory, however, the same configuration can use a number of different overlay networks, as long as the Envoy container is connected to all of them.

Reminder! This option is only available if Pull Request #529 is applied to the Registrator codebase.

SERVICE_<Port>_NAME: the DNS name of the service in question. It should resolve to the IP address of a swarm node or of the load balancer.

SERVICE_<Port>_IGNORE: should be set to true for all exposed ports that should not be proxied.

<Port> in all three variables above is the port exposed by the container.

For example, let’s say that we have a service with exposed ports 8080 and 5005 and we want it to be accessible under the name test-service.swarm.demo.com. To achieve this, the following three variables should be added to the service definition in question:

    environment:
      - SERVICE_8080_NETWORK=envoy
      - SERVICE_8080_NAME=test-service.swarm.demo.com
      - SERVICE_5005_IGNORE=true
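When many services follow the same pattern, these entries can be generated rather than typed by hand. The following is a small sketch in Python; the function `registrator_env` is a hypothetical helper written for this article, not part of Registrator, and it assumes that exactly one exposed port per service is proxied while all others are ignored.

```python
def registrator_env(name, proxied_port, exposed_ports, network="envoy"):
    """Return the environment entries for a service with one proxied port.

    Every other exposed port is marked with SERVICE_<Port>_IGNORE=true.
    """
    if proxied_port not in exposed_ports:
        raise ValueError(f"port {proxied_port} is not among the exposed ports")
    env = [
        f"SERVICE_{proxied_port}_NETWORK={network}",
        f"SERVICE_{proxied_port}_NAME={name}",
    ]
    for port in exposed_ports:
        if port != proxied_port:
            env.append(f"SERVICE_{port}_IGNORE=true")
    return env
```

Calling `registrator_env("test-service.swarm.demo.com", 8080, [8080, 5005])` yields the same three entries as in the example above.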

With this approach there is no need to publish the ports of individual services, as they are all served by Envoy Proxy.